Part One: Choosing the Right Algo Trading Platform Is Not a Technical Decision

One of the most consequential decisions an algorithmic trader will ever make has nothing to do with indicators, models, or markets.

It is the choice of platform.

Your platform is not just software. It is the environment in which every idea is tested, refined, deployed, and either validated or destroyed by the market. It determines how quickly you can move from concept to execution, how realistically your strategies are tested, and how much friction exists between research and live trading.

In other words, your platform quietly defines your operational edge long before your first trade is placed.

At some point, every serious trader asks the same question. Should I use an existing platform, or should I build my own?

That question sounds rational. In many cases, it is a trap.

The build-your-own illusion

For technically inclined traders, especially those with development experience, building an internal trading stack feels natural. Familiar. Even responsible. Why rely on someone else’s tools when you could design exactly what you want?

The problem is not capability. The problem is scope.

You are not building “a strategy engine.” You are building a production-grade system that must simulate markets accurately, manage risk consistently, route and execute orders reliably, and stay operational under stress. That includes backtesting infrastructure, live execution logic, brokerage and exchange integrations, failure handling, monitoring, and long-term maintenance.

This is not a side project. It is an infrastructure company inside your trading operation.

What usually happens is predictable. Months are spent solving engineering problems that have nothing to do with finding market edges. Energy shifts from research to architecture. Progress feels real because code is being written, but capital is not being deployed and strategies are not being validated.

Unless there is a clear, unavoidable reason to build, doing so early almost always delays the only thing that matters: developing and proving profitable strategies in live markets.

The reality most traders underestimate

If you are trading systematically without a full institutional team behind you, you are performing multiple roles at once.

You are a trader, identifying opportunities and managing exposure.

You are a researcher, designing hypotheses, testing assumptions, and validating edge.

You are a developer, translating ideas into production-ready systems.

Each of those roles demands focus. Together, they compete for the same limited time and attention.

This is where platform choice becomes decisive. A well-designed platform acts as leverage. It absorbs complexity so that your effort compounds where it matters most. A poorly chosen platform does the opposite. It consumes attention, creates friction, and slowly shifts your time away from research and decision-making into maintenance and workaround building.

Choosing an established platform is not a shortcut. It is an allocation decision. You are deliberately outsourcing infrastructure so you can concentrate on strategy and execution.

When engineering becomes avoidance

There is a more subtle risk that affects many technically strong traders.

When trading decisions become uncertain or uncomfortable, it is tempting to retreat into engineering. Code is deterministic. The architecture is clean. Refactoring feels productive. Research, by contrast, involves ambiguity, false starts, and uncomfortable conclusions.

So instead of questioning exits, capital allocation, or portfolio interaction, traders polish tooling. They rebuild data pipelines. They optimize logging. They redesign systems that are already “good enough.”

The result is activity without progress.

Markets do not reward elegant systems. They reward robust portfolios that survive real conditions. Infrastructure exists to support that outcome, not to become the outcome itself.

A platform should reduce the temptation to hide in engineering by making the right work easier than the distracting work.

Tools should remove noise, not create it

For independent traders and small teams, the ideal platform does three things exceptionally well.

It handles infrastructure reliably so you do not have to reinvent it.

It provides stable execution, portfolio management, and risk tooling out of the box.

It keeps your attention focused on research, testing, and deployment rather than operational overhead.

The same principle applies to market data. High-quality, institutional-grade data exists specifically to eliminate the need for constant ingestion fixes, roll adjustments, and gap management. Data governance should not be your competitive differentiator. Strategy should be.

When tools do their job properly, they fade into the background. That is not a weakness. That is the point.

The real objective

Early in an algorithmic trading journey, the objective is not to build the perfect system. It is to shorten the distance between idea and live validation.

Find edges. Test them realistically. Deploy them responsibly.

Code exists to serve that mission. When code becomes the mission, priorities have already drifted.

In the next section, we’ll define what actually separates a serious algo trading platform from a toy, and which capabilities matter if your goal is to trade professionally rather than experiment indefinitely.

Part Two: The Standards Serious Algo Traders Actually Require

Before comparing platforms or debating preferences, there is a more important step that most traders skip.

You need standards.

Not marketing standards. Not feature grids pulled from landing pages. Real operational requirements that determine whether a platform can support serious algorithmic trading or whether it will quietly fail when scale, complexity, or real capital enter the picture.

The difference between a toy and a professional tool is not one feature. It is a pattern.

Platform neutrality is foundational

A serious algo trading platform cannot lock you into a single broker, exchange, or data provider.

Venue neutrality means freedom of choice. Freedom to source data from one provider and execute through another. Freedom to move when costs change, when reliability degrades, or when strategy requirements evolve.

The moment a platform dictates where you trade or how you access markets, it stops serving you and starts shaping your decisions. That is not a technical inconvenience. It is a strategic liability.
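As a rough illustration of what venue neutrality looks like in code, the sketch below keeps strategy logic behind two small interfaces, one for data and one for execution. Every name here (`DataFeed`, `Broker`, `ListFeed`, `RecordingBroker`, `buy_on_new_high`) is hypothetical and invented for this example; it is not the API of any platform discussed in this series.

```python
from dataclasses import dataclass
from typing import Iterable, Protocol


@dataclass(frozen=True)
class Bar:
    symbol: str
    close: float


class DataFeed(Protocol):
    """Anything that can supply bars for a symbol."""
    def bars(self, symbol: str) -> Iterable[Bar]: ...


class Broker(Protocol):
    """Anything that can accept an order and return an order id."""
    def submit(self, symbol: str, quantity: int) -> str: ...


class ListFeed:
    """Hypothetical data vendor: replays prices held in memory."""
    def __init__(self, prices):
        self._prices = list(prices)

    def bars(self, symbol):
        return [Bar(symbol, price) for price in self._prices]


class RecordingBroker:
    """Hypothetical broker: records orders instead of routing them."""
    def __init__(self, name):
        self.name = name
        self.orders = []

    def submit(self, symbol, quantity):
        self.orders.append((symbol, quantity))
        return f"{self.name}-{len(self.orders)}"


def buy_on_new_high(feed: DataFeed, broker: Broker, symbol: str) -> list:
    """Buy one unit whenever the close exceeds every earlier close.

    The function depends only on the two interfaces, so the data vendor
    and the broker can be swapped independently of each other.
    """
    order_ids = []
    highest = None
    for bar in feed.bars(symbol):
        if highest is not None and bar.close > highest:
            order_ids.append(broker.submit(symbol, 1))
        highest = bar.close if highest is None else max(highest, bar.close)
    return order_ids
```

Calling `buy_on_new_high(ListFeed([100, 101, 100.5, 102]), RecordingBroker("A"), "ES")` and then repeating the call with `RecordingBroker("B")` changes only where the orders go. The strategy function itself is untouched, which is the property the section describes.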

Power without constant friction

Many platforms force a false tradeoff.

Either the system is simple and accessible but quickly becomes limiting, or it is powerful and flexible but demands constant engineering attention.

A great platform avoids this trap. It stays out of the way during rapid iteration, yet does not block progress as strategies grow more sophisticated. Complexity should be available when needed, not imposed by default.

The best tools scale with ambition instead of forcing a platform migration mid-journey.

Multi-asset support is no longer optional

Modern systematic trading is not confined to a single market.

Equities, futures, forex, and crypto are interconnected through volatility, liquidity, and capital flows. A platform that forces strategies into single-asset silos restricts diversification and opportunity before trading even begins.

If research, testing, and exposure management cannot happen across asset classes within a unified framework, portfolio construction is artificially constrained from day one.

Backtesting must resemble reality

Backtesting exists to remove illusions, not to create them.

A credible engine must be event-driven and high-fidelity. It must respect market structure, session rules, order handling, and execution mechanics. Latency, sequencing, and fill logic matter.

If results look unrealistically smooth or consistently beat what you would reasonably expect from the idea, the cause is rarely alpha. It is usually the simulator.
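To make the sequencing point concrete, here is a minimal sketch of an event-driven loop, assuming one simple fill rule: a decision made on one bar fills at the open of the next bar. The names `run_backtest` and `long_after_up_close` are hypothetical, and a real engine models far more (sessions, order types, partial fills, latency). The point is only the ordering of events.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bar:
    open: float
    close: float


def run_backtest(bars, decide):
    """Replay bars in order; a decision on bar t fills at the open of bar t+1.

    `decide(history)` returns the desired position (+1, 0, or -1) and only
    ever sees bars that have already closed, so it cannot look ahead.
    """
    position = 0
    pending = None       # target position waiting for the next open
    entry_price = None
    pnl = 0.0
    history = []

    for bar in bars:
        # 1. Fill the pending order first, at this bar's open.
        if pending is not None and pending != position:
            if position != 0:
                pnl += position * (bar.open - entry_price)
            position = pending
            entry_price = bar.open if position != 0 else None

        # 2. Only then reveal the completed bar to the strategy.
        history.append(bar)
        pending = decide(history)

    # Mark any position that is still open to the final close.
    if position != 0:
        pnl += position * (history[-1].close - entry_price)
    return pnl


def long_after_up_close(history):
    """Toy rule: hold a long position after an up bar, stay flat otherwise."""
    last = history[-1]
    return 1 if last.close > last.open else 0


bars = [Bar(100, 101), Bar(102, 103), Bar(104, 103), Bar(103, 105)]
result = run_backtest(bars, long_after_up_close)  # 1.0
```

The long entry fills at 102 rather than at the 101 close that triggered it, and the exit fills at 103. A simulator that filled at the signal bar's own close would report a better entry than was actually available, which is one way results end up unrealistically smooth.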

Portfolio-level thinking separates professionals from experimenters

Real trading happens at the portfolio level, not the strategy level.

Strategies interact. They share capital. They amplify and dampen drawdowns. They fail and recover on different schedules. Platforms that only allow isolated strategy testing force traders into artificial workflows and post-processing gymnastics.

Native support for running multiple independent strategies as a single portfolio is one of the clearest indicators that a platform was designed for real-world trading rather than experimentation.
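A small worked example shows why the portfolio view differs from the sum of isolated tests. The sketch below uses made-up daily P&L figures for two hypothetical strategies and compares each strategy's maximum drawdown with that of the combined book. The helper names are assumptions for illustration only.

```python
def equity_curve(pnl, start=0.0):
    """Cumulative equity, including the starting value as the first point."""
    curve = [start]
    for change in pnl:
        curve.append(curve[-1] + change)
    return curve


def max_drawdown(curve):
    """Largest peak-to-trough decline along the curve."""
    peak = curve[0]
    worst = 0.0
    for value in curve:
        peak = max(peak, value)
        worst = max(worst, peak - value)
    return worst


def portfolio_pnl(*streams):
    """Sum per-period P&L across strategies that share one account."""
    return [sum(period) for period in zip(*streams)]


# Made-up daily P&L for two hypothetical strategies.
trend = [5, -3, -3, 6, 2]
mean_reversion = [-2, 3, 3, -1, 1]

dd_trend = max_drawdown(equity_curve(trend))                    # 6.0
dd_mean_reversion = max_drawdown(equity_curve(mean_reversion))  # 2.0
dd_portfolio = max_drawdown(
    equity_curve(portfolio_pnl(trend, mean_reversion))
)                                                               # 0.0
```

In this contrived case the losses never coincide, so the combined book has no drawdown at all even though each strategy has one. With positively correlated strategies the effect reverses and drawdowns stack. Neither outcome is visible from the two equity curves examined separately.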

Cost modeling is structural, not optional

Transaction costs are not noise. They define viability.

Slippage, commissions, and fees determine whether a strategy survives outside of a backtest. Ignoring them or modeling them loosely is equivalent to testing a car without friction and assuming the results will transfer to real roads.

If cost modeling is not first-class, results are fiction.
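A few lines of arithmetic illustrate how quickly costs change the verdict. The sketch below assumes a deliberately simple model, a fixed commission and a fixed adverse slippage per unit on each side of the trade. `CostModel` and `net_pnl` are hypothetical names, and real cost models are usually more detailed (tiered fees, spread-dependent slippage, exchange fees).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostModel:
    commission_per_unit: float  # charged per unit on each side of the trade
    slippage_per_unit: float    # adverse price move per unit on each side

    def round_trip_cost(self, quantity):
        """Total cost of entering and exiting `quantity` units."""
        per_side = self.commission_per_unit + self.slippage_per_unit
        return 2 * abs(quantity) * per_side


def net_pnl(entry, exit_, quantity, costs):
    """Gross P&L of a round trip minus modeled transaction costs."""
    gross = (exit_ - entry) * quantity
    return gross - costs.round_trip_cost(quantity)


costs = CostModel(commission_per_unit=0.01, slippage_per_unit=0.02)
gross = (100.05 - 100.00) * 100            # about +5.00 before costs
net = net_pnl(100.00, 100.05, 100, costs)  # about -1.00 after costs
```

The trade earns five cents per unit before costs and pays six cents per unit to get in and out. A backtest that omits the cost term reports a winner; the same trade in a live account is a loser.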

Robustness testing is survival testing

The goal of research is not to explain the past. It is to survive the future.

Parameter sensitivity analysis, Monte Carlo simulations, and walk-forward testing exist to answer a single question: does this strategy still work when conditions change?

Platforms that make robustness testing difficult or optional encourage overfitting by convenience. The more realistic the stress testing, the fewer surprises appear in live trading.
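Two of the techniques named above can be sketched in a few lines of standard-library Python. The version below assumes rolling, fixed-size walk-forward windows and a Monte Carlo test that simply reshuffles the order of historical trades. Both are simplified variants chosen for brevity, and all function names are hypothetical.

```python
import random


def walk_forward_windows(n_bars, train, test):
    """Yield (train_range, test_range) index pairs.

    Each out-of-sample test window starts exactly where its training
    window ends, then both roll forward by the test length.
    """
    start = 0
    while start + train + test <= n_bars:
        yield (range(start, start + train),
               range(start + train, start + train + test))
        start += test


def max_drawdown(pnl):
    """Largest peak-to-trough decline of cumulative P&L, starting from zero."""
    equity = peak = worst = 0.0
    for change in pnl:
        equity += change
        peak = max(peak, equity)
        worst = max(worst, peak - equity)
    return worst


def monte_carlo_drawdowns(trade_pnl, runs=1000, seed=0):
    """Shuffle trade order `runs` times and return the sorted max drawdowns.

    Total profit is identical in every run; only the path changes, which
    shows how much of the historical drawdown was luck of sequencing.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(runs):
        shuffled = list(trade_pnl)
        rng.shuffle(shuffled)
        results.append(max_drawdown(shuffled))
    return sorted(results)


windows = list(walk_forward_windows(n_bars=10, train=4, test=2))
drawdowns = monte_carlo_drawdowns([10, -5, 10, -5, -5, 10], runs=200, seed=1)
worst_5_percent = drawdowns[int(0.95 * len(drawdowns))]
```

With ten bars, a four-bar training window, and a two-bar test window, the generator produces three train/test pairs. Reading a high percentile of the shuffled drawdowns, rather than the single historical figure, gives a more conservative number to plan capital around.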

Research and production must be the same code

One of the most dangerous gaps in algorithmic trading is the transition from research to live execution.

If live trading code differs from research code, you are no longer trading what you tested. Every divergence introduces unvalidated risk.

A professional platform allows the same logic to run in simulation and production without rewrites, forks, or conditional hacks. Backtest-to-live parity is not a luxury. It is a safety requirement.
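One way to picture parity is a strategy object that never knows which mode it is running in. In the sketch below, the same class is driven once by a stored list and once by an iterator standing in for a live stream. `MovingAverageCross`, `run_simulation`, and `run_streaming` are hypothetical names, and the "live" driver is only a stand-in, not a real market connection.

```python
class MovingAverageCross:
    """Strategy logic only: no knowledge of simulation versus live."""

    def __init__(self, fast, slow):
        self.fast = fast
        self.slow = slow
        self.closes = []

    def on_bar(self, close):
        """Return the target position (1 or 0) after seeing this close."""
        self.closes.append(close)
        if len(self.closes) < self.slow:
            return 0
        fast_ma = sum(self.closes[-self.fast:]) / self.fast
        slow_ma = sum(self.closes[-self.slow:]) / self.slow
        return 1 if fast_ma > slow_ma else 0


def run_simulation(strategy, closes):
    """Backtest driver: walks a stored list of closes."""
    return [strategy.on_bar(close) for close in closes]


def run_streaming(strategy, feed):
    """Stand-in for a live driver: consumes closes one at a time as they arrive."""
    targets = []
    for close in feed:
        targets.append(strategy.on_bar(close))
    return targets


closes = [10, 11, 12, 11, 10, 9, 10, 12]
simulated = run_simulation(MovingAverageCross(2, 3), closes)
streamed = run_streaming(MovingAverageCross(2, 3), iter(closes))
```

Both drivers produce the same sequence of target positions because the only thing that differs is how bars are delivered. A check like `simulated == streamed`, run against recorded live data, is a cheap regression test for the divergence this section warns about.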

Modern development tools matter more every year

Algorithmic trading is software engineering.

Platforms built on standard languages such as Python or C# integrate naturally with professional tooling, version control, automated testing, and increasingly, AI-assisted development. Proprietary scripting environments age poorly and compound friction over time.

As development workflows evolve, alignment with industry-standard languages becomes a strategic advantage.

Active development and long-term stability

Two qualities matter, and both are required.

A platform must be actively developed, with a clear roadmap and consistent improvement. At the same time, it must be stable under live conditions, where failures are not theoretical.

When real capital is deployed, reliability is not something to admire. It is something to demand.

Which type of platform we actually prefer

Given these standards, preference is not about brand loyalty or feature count. It is about operational philosophy.

We prefer platforms that prioritize execution consistency over feature theatrics. Platforms that reduce engineering overhead rather than create it. Platforms that make portfolio-level research the default instead of an afterthought.

Specifically, the preference is for systems where research and live execution are identical, where cost modeling and robustness testing are standard, where multi-strategy portfolios are native, and where venue choice is never dictated by the software itself.

Standard development languages matter. Modern tooling matters. But stability under real capital matters most.

The right platform is the one that lets strategies move from idea to validation to production with the least unnecessary friction, while still holding up when conditions turn adverse.

Keep these standards in mind as we move into platform comparisons. Features are easy to list. Platforms that consistently meet these requirements under pressure are far rarer.

Part Three: The Platform Landscape and the Real Trade-Offs

Once you understand the standards that matter, the platform landscape becomes easier to interpret.

There is no shortage of algo trading platforms. Some are GUI-driven, some are code-first, some try to split the difference. On paper, many of them look similar. In practice, they behave very differently once you move beyond isolated experiments and start thinking in terms of portfolios, operational reliability, and real capital.

What follows is not a feature comparison. It is a practical look at how different platforms tend to perform once they are used seriously.

TradeStation: mature, approachable, and constrained

TradeStation is one of the oldest names in algorithmic trading. Its longevity matters. Decades of use have made it stable, familiar, and approachable, especially for traders who want to get something running quickly.

That accessibility is also its limitation.

TradeStation is built as a contained ecosystem. Its proprietary scripting language lowers the barrier to entry, but as strategies grow more sophisticated, that same abstraction becomes restrictive. Portfolio-level workflows feel forced, and integration beyond the platform’s boundaries is limited.

TradeStation excels at getting started. It struggles when you want to grow beyond the box it was designed to be.

NinjaTrader: flexible scripting, desktop gravity

NinjaTrader earned its position by serving the retail futures community well. Using C# instead of a proprietary language is a meaningful advantage, and the visual feedback loop between code and charts is productive for many traders.

The trade-off is architectural.

NinjaTrader remains tightly coupled to a Windows desktop model and a GUI-first workflow. As research scales, the platform can become resource-heavy, and native portfolio-level, multi-strategy testing is not its strength. It feels like a powerful charting application with scripting capabilities rather than a portfolio-first research environment.

For traders focused on discretionary workflows augmented by automation, this can be a good fit. For systematic portfolio development, limitations surface quickly.

QuantConnect: institutional depth, developer burden

QuantConnect is built on a professional-grade, event-driven engine and supports a wide range of asset classes and datasets. From a capability standpoint, it is one of the most flexible platforms available.

That flexibility comes with a cost.

QuantConnect is unapologetically developer-first. There is little abstraction from complexity. Visual feedback is minimal, workflows are code-heavy, and meaningful progress requires strong engineering discipline. For teams or individuals who want full control and are willing to own infrastructure decisions, this can be powerful.

For traders trying to compress research cycles and minimize overhead, the learning curve and operational burden are real considerations.

NautilusTrader: performance and control at a price

NautilusTrader is designed for performance, transparency, and architectural clarity. It appeals to experienced developers who care deeply about latency, control, and explicit system design.

It is not designed as an on-ramp.

There is no GUI, documentation is sparse, and the assumption is that users are comfortable navigating a complex codebase. The platform is actively evolving, which brings power but also frequent change. For advanced quants and engineers, this can be an advantage. For most traders, it introduces more setup and maintenance than the edge it delivers.

This is a tool for people who want to engineer trading systems, not just trade them.

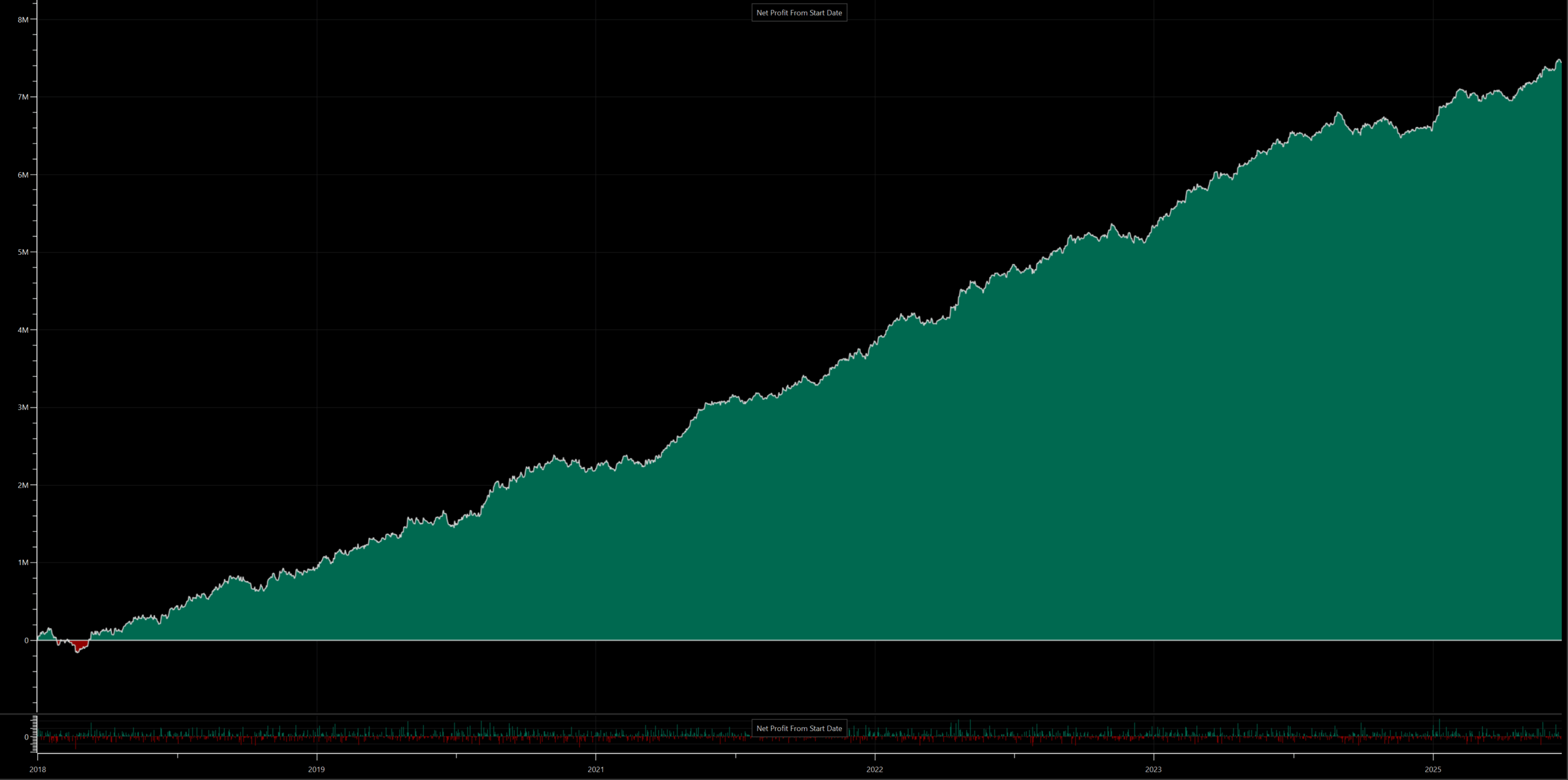

Tickblaze: a hybrid, portfolio-first approach

Tickblaze occupies a different position in the landscape.

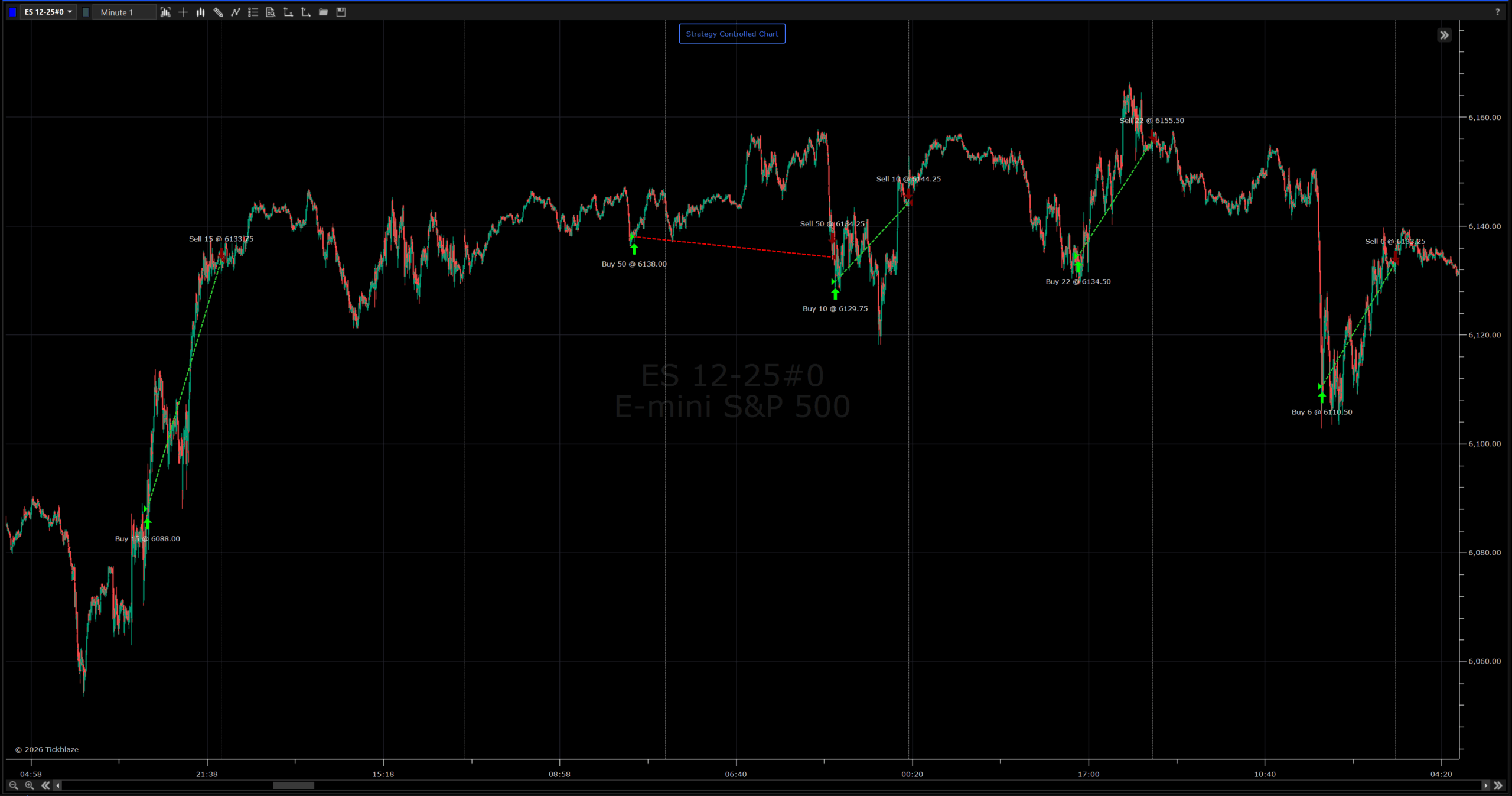

It combines a full-featured graphical environment with an institutional-grade algorithmic engine. The emphasis is not on scripting strategies in isolation, but on managing portfolios of independent strategies across venues and asset classes.

Modern development languages are first-class. Venue neutrality is built in. Backtest-to-live parity is treated as a requirement, not an enhancement. Portfolio-level, multi-strategy backtesting is native rather than simulated through post-processing.

The defining characteristic, however, is operational reliability.

“Institutional” is often used loosely in trading software. Here, it is best understood as predictability under pressure. Fewer edge-case failures. Fewer surprises in live execution. Fewer reasons to override systems because the platform itself is uncertain.

That reliability is what makes a platform usable over years, not just during research phases.

Power versus complexity

One useful way to think about these platforms is along two dimensions.

Power reflects how far a platform can take you: portfolio tooling, asset coverage, execution flexibility, and scalability.

Complexity reflects the cost of using that power: learning curve, setup burden, and ongoing operational overhead.

Some platforms sit low on complexity but cap out quickly. Others offer immense power but require you to become a systems engineer to unlock it. The most practical solutions live in the middle, where serious capability exists without forcing infrastructure ownership as the primary job.

That balance is not accidental. It is the result of design philosophy.

Choosing with eyes open

Every platform discussed here brings something valuable. None are universally “best.”

The mistake is not choosing the wrong platform. The mistake is choosing without understanding the trade-offs you are accepting.

As you move from experimentation into production, from single strategies into portfolios, and from simulated results into real capital, those trade-offs compound.

In the final section, we’ll pull these pieces together and explain how to think about platform choice as a long-term operational decision rather than a one-time software purchase.

| Platform | Core Strength | Primary Limitation | Best Suited For |
| --- | --- | --- | --- |
| TradeStation | Extremely mature and beginner-friendly ecosystem | Closed environment, limited scalability for advanced portfolio workflows | Traders getting started who value simplicity over flexibility |
| NinjaTrader | C# scripting with strong chart-based development | Desktop-first architecture, weak native portfolio testing | Futures traders blending discretionary and automated trading |
| QuantConnect | Institutional-grade engine with broad asset support | Steep learning curve and heavy developer overhead | Quant developers prioritizing flexibility and custom research pipelines |
| NautilusTrader | Maximum performance, control, and transparency | High setup complexity, minimal abstraction, no GUI | Advanced engineers building fully custom trading systems |
| Tickblaze | Portfolio-first design with backtest-to-live parity | Smaller ecosystem than legacy retail platforms | Serious algo traders seeking power without excessive infrastructure burden |

Part Four: Why This Ultimately Comes Down to Tickblaze

After stripping away marketing claims, feature grids, and personal bias, platform choice usually collapses into a single tension.

If a system is too simple, you outgrow it quickly.

If it is too complex, you spend your time managing infrastructure instead of trading.

Most platforms sit on one side of that divide.

The reason Tickblaze stands out is that it deliberately targets the middle ground where serious trading actually happens. Powerful enough to scale with sophisticated strategies and portfolio-level thinking, but accessible enough that progress is measured in days and weeks, not quarters.

That balance is not accidental. It is the result of designing for how systematic traders actually work over time.

Portfolio-first, not strategy-first

The defining capability is not a single indicator, language choice, or UI element. It is the assumption that real trading happens at the portfolio level.

Most platforms implicitly treat strategies as isolated experiments. You test one idea, examine one equity curve, and repeat. Portfolio behavior is something you reconstruct afterward.

Tickblaze inverts that model. Independent strategies are treated as components of a unified system from the start. Capital allocation, drawdowns, and interaction effects are visible immediately, not discovered later through spreadsheets or custom scripts.

Once you operate this way, it becomes difficult to justify any workflow that forces you back into single-strategy thinking.

Modern foundations matter more than features

The technical foundation of a platform determines what is possible two or three years from now, not just today.

Tickblaze is built on a modern technology stack and supports standard development languages used across the broader software industry. That matters because algorithmic trading is converging with professional software engineering, not diverging from it.

As AI-assisted development becomes normal, platforms aligned with industry-standard languages and tooling gain compounding advantages. The ability to prototype faster, refactor safely, and integrate external tooling becomes a force multiplier rather than a constraint.

Legacy platforms accumulate technical debt quietly. Modern platforms either invest early or fall behind later.

Backtest-to-live parity is a safety feature

One of the most underappreciated risks in systematic trading is the gap between research and production.

Tickblaze treats backtest-to-live parity as non-negotiable. The same code, logic, and structure run in simulation and in production. The only thing that changes is the data source.

This is not just a convenience. It is risk control.

Every divergence between research and live execution introduces untested behavior. Eliminating that gap removes an entire class of failure modes that only appear when real capital is involved.

Visual workflows without sacrificing control

There is a false assumption that serious platforms must abandon visual workflows entirely.

Tickblaze proves otherwise.

Charts, performance outputs, and diagnostics are integrated directly into the research process without turning the platform into a toy. Visual feedback accelerates iteration, especially when running large numbers of tests, while still allowing full programmatic control where needed.

Friction compounds. When insight is one click away instead of one script away, experimentation increases. Over hundreds of iterations, that difference becomes meaningful.

The CLI is about the future, not convenience

The presence of a cross-platform command-line interface is not about avoiding a GUI. It is about extensibility.

A CLI allows research workflows to become programmable. It enables automated testing pipelines, remote execution, and integration with external systems. More importantly, it opens the door to agent-driven research workflows where tools can operate on tools.

This is where systematic trading is heading. Platforms locked behind GUI-only workflows will struggle to participate in that evolution.
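The idea of programmable research can be sketched without reference to any particular tool. The example below runs a parameter sweep by launching one subprocess per parameter combination and collecting whatever each run prints. The command is a placeholder Python one-liner, not the Tickblaze CLI or any other real backtest command, and `parameter_grid`, `run_sweep`, and `placeholder_command` are hypothetical names.

```python
import itertools
import subprocess
import sys


def parameter_grid(**options):
    """Yield one dict per combination of the supplied parameter values."""
    names = list(options)
    for values in itertools.product(*(options[name] for name in names)):
        yield dict(zip(names, values))


def run_sweep(build_command, grid):
    """Run one external command per parameter set and collect its stdout."""
    results = []
    for params in grid:
        completed = subprocess.run(
            build_command(params),
            capture_output=True,
            text=True,
            check=True,  # raise if any run exits with a non-zero status
        )
        results.append((params, completed.stdout.strip()))
    return results


def placeholder_command(params):
    """Placeholder: a Python one-liner standing in for a real backtest CLI call."""
    code = f"print({params['fast']} * {params['slow']})"
    return [sys.executable, "-c", code]


sweep = run_sweep(placeholder_command, parameter_grid(fast=[2, 3], slow=[10]))
```

Swapping `placeholder_command` for a function that builds a real command line is the only change needed to point the same loop at an actual tool. Once a workflow has this shape, schedulers, CI pipelines, and automated agents can drive it the same way a person would.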

Honest limitations, realistic trade-offs

No platform is perfect, and pretending otherwise is a mistake.

Tickblaze does not yet cover every asset class, and its ecosystem is younger than some legacy platforms. There are fewer third-party resources, fewer plugins, and fewer years of accumulated community content.

What matters is trajectory.

Active development, architectural clarity, and a clear focus on portfolio-scale trading tend to outweigh ecosystem size over time. Mature but stagnant platforms age poorly. Focused platforms with momentum tend to close gaps quickly.

Productivity is an edge

There is one final factor that is difficult to quantify but impossible to ignore.

When tools reduce friction, traders test more ideas. They iterate faster. They stay engaged longer. Momentum builds.

When tools fight back, motivation erodes. Progress slows. Strategy development turns into infrastructure maintenance.

Over hundreds of hours, that difference compounds into a real edge.

The bottom line

The preference for Tickblaze is not about brand loyalty or novelty. It is about alignment.

Alignment with how systematic trading actually scales.

Alignment with modern development practices.

Alignment with portfolio-level thinking and operational reliability.

For traders who want to move beyond experimentation and build durable, repeatable processes around real capital, that alignment matters more than any individual feature.

That is why, after examining the landscape honestly, this is where we ultimately land.

Part Five: Final Thoughts

Choosing an algorithmic trading platform is not about chasing features or following trends. It is about deciding how you want to work, how you want to scale, and how much friction you are willing to tolerate between an idea and real-world execution.

The platforms that endure are not the ones with the loudest marketing or the longest feature lists. They are the ones that quietly support disciplined research, realistic testing, portfolio-level thinking, and reliable execution when capital is on the line.

That is ultimately why we continue to build on and around Tickblaze.

Not because it tries to be everything to everyone, but because it aligns with how serious algorithmic trading actually evolves. From research to validation. From single strategies to portfolios. From experimentation to production. From theory to real capital.

If you are looking for a platform that reduces noise, respects reality, and gives you room to grow without forcing you to rebuild your workflow every step of the way, this is where we believe that journey should start.

This series is based on text originally written by Petr Žůrek (Algotrading with Petr) for his upcoming course Algotrading Launchpad, created in collaboration with Tickblaze. We believe it will be a valuable resource for traders looking to approach algorithmic trading with rigor, discipline, and realism. Get early access / updates here: Algotrading Launchpad.

If you’re ready to move beyond experimentation and build strategies on infrastructure designed for the long term, explore what’s possible with Tickblaze.